After spending a day reading the EU Digital Services Act — a task he wouldn’t wish on his worst enemy — Murray concludes it is not why the Telegram CEO is being detained.

Pavel Durov, CEO and co-founder of Telegram, in 2015. (TechCrunch, Flickr, CC BY 2.0)

By Craig Murray

CraigMurray.org.uk

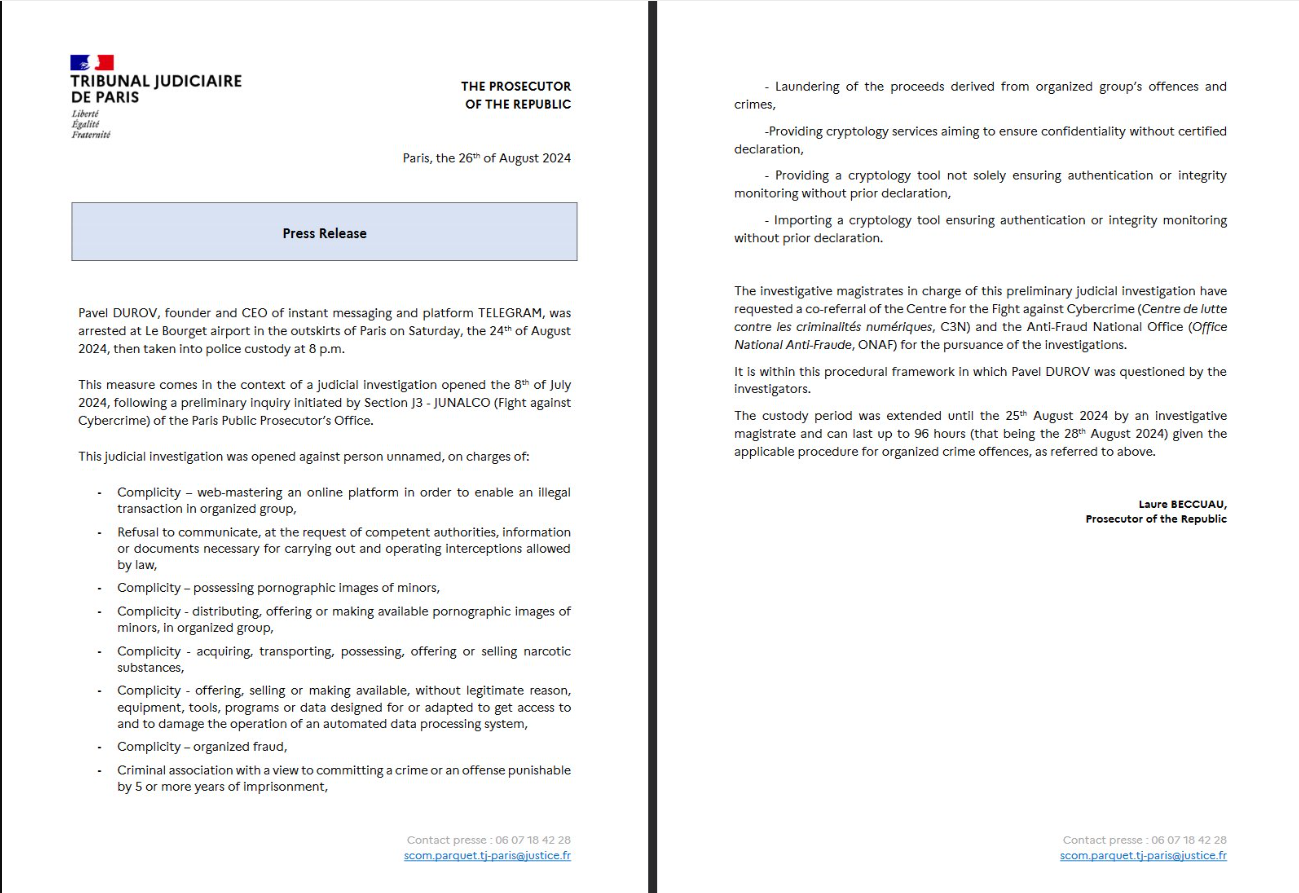

The detention of Pavel Durov is being portrayed as a result of the EU Digital Services Act. But having spent my day reading the EU Digital Services Act (a task I would not wish upon my worst enemy), it does not appear to me to say what it is being portrayed as saying.

EU Acts are horribly dense and complex, and are published as “Regulations” and “Articles.” Both cover precisely the same ground, but for purposes of enforcement the more detailed “Regulations” are the more important, and those are referred to below. The “Articles” are entirely consistent with this.

[Durov was formally charged on Wednesday and prevented from leaving France.]

So, for example, Regulation 20 makes the “intermediary service,” in this case Telegram, only responsible for illegal activity using its service if it has deliberately collaborated in the illegal activity.

Providing encryption or anonymity specifically does not qualify as deliberate collaboration in illegal activity.

“(20) Where a provider of intermediary services deliberately collaborates with a recipient of the services in order to undertake illegal activities, the services should not be deemed to have been provided neutrally and the provider should therefore not be able to benefit from the exemptions from liability provided for in this Regulation.

This should be the case, for instance, where the provider offers its service with the main purpose of facilitating illegal activities, for example by making explicit that its purpose is to facilitate illegal activities or that its services are suited for that purpose. The fact alone that a service offers encrypted transmissions or any other system that makes the identification of the user impossible should not in itself qualify as facilitating illegal activities.”

And at para 30, there is specifically no general monitoring obligation on the service provider to police the content. In fact, the text is very emphatic that Telegram is under no obligation to take proactive measures.

“(30) Providers of intermediary services should not be, neither de jure, nor de facto, subject to a monitoring obligation with respect to obligations of a general nature. This does not concern monitoring obligations in a specific case and, in particular, does not affect orders by national authorities in accordance with national legislation, in compliance with Union law, as interpreted by the Court of Justice of the European Union, and in accordance with the conditions established in this Regulation.

Nothing in this Regulation should be construed as an imposition of a general monitoring obligation or a general active fact-finding obligation, or as a general obligation for providers to take proactive measures in relation to illegal content.”

The Telegram app on a smartphone screen. (Focal Foto, Flickr, CC BY-NC 2.0)

However, Telegram is obliged to act against specified accounts in relation to an individual order from a national authority concerning specific content. So while it has no general tracking or censorship obligation, it does have to act at the instigation of national authorities over individual content.

“(31) Depending on the legal system of each Member State and the field of law at issue, national judicial or administrative authorities, including law enforcement authorities, may order providers of intermediary services to act against one or more specific items of illegal content or to provide certain specific information. The national laws on the basis of which such orders are issued differ considerably and the orders are increasingly addressed in cross-border situations.

In order to ensure that those orders can be complied with in an effective and efficient manner, in particular in a cross-border context, so that the public authorities concerned can carry out their tasks and the providers are not subject to any disproportionate burdens, without unduly affecting the rights and legitimate interests of any third parties, it is necessary to set certain conditions that those orders should meet and certain complementary requirements relating to the processing of those orders.

Consequently, this Regulation should harmonise only certain specific minimum conditions that such orders should fulfil in order to give rise to the obligation of providers of intermediary services to inform the relevant authorities about the effect given to those orders. Therefore, this Regulation does not provide the legal basis for the issuing of such orders, nor does it regulate their territorial scope or cross-border enforcement.”

The national authorities can demand content is removed, but only for “specific items”:

“(51) Having regard to the need to take due account of the fundamental rights guaranteed under the Charter of all parties concerned, any action taken by a provider of hosting services pursuant to receiving a notice should be strictly targeted, in the sense that it should serve to remove or disable access to the specific items of information considered to constitute illegal content, without unduly affecting the freedom of expression and of information of recipients of the service.

Notices should therefore, as a general rule, be directed to the providers of hosting services that can reasonably be expected to have the technical and operational ability to act against such specific items. The providers of hosting services who receive a notice for which they cannot, for technical or operational reasons, remove the specific item of information should inform the person or entity who submitted the notice.”

There are extra obligations for Very Large Online Platforms, which have over 45 million users within the EU. These are not extra monitoring obligations on content, but rather extra obligations to ensure safeguards in the design of their systems:

The Digital Services Act’s rules vary for different online entities to match their role, size and impact in the online system. (European Commission, Wikimedia Commons, CC BY 4.0)

“(79) Very large online platforms and very large online search engines can be used in a way that strongly influences safety online, the shaping of public opinion and discourse, as well as online trade. The way they design their services is generally optimised to benefit their often advertising-driven business models and can cause societal concerns.

Effective regulation and enforcement is necessary in order to effectively identify and mitigate the risks and the societal and economic harm that may arise.

Under this Regulation, providers of very large online platforms and of very large online search engines should therefore assess the systemic risks stemming from the design, functioning and use of their services, as well as from potential misuses by the recipients of the service, and should take appropriate mitigating measures in observance of fundamental rights.

In determining the significance of potential negative effects and impacts, providers should consider the severity of the potential impact and the probability of all such systemic risks. For example, they could assess whether the potential negative impact can affect a large number of persons, its potential irreversibility, or how difficult it is to remedy and restore the situation prevailing prior to the potential impact.

(80) Four categories of systemic risks should be assessed in-depth by the providers of very large online platforms and of very large online search engines. A first category concerns the risks associated with the dissemination of illegal content, such as the dissemination of child sexual abuse material or illegal hate speech or other types of misuse of their services for criminal offences, and the conduct of illegal activities, such as the sale of products or services prohibited by Union or national law, including dangerous or counterfeit products, or illegally-traded animals.

For example, such dissemination or activities may constitute a significant systemic risk where access to illegal content may spread rapidly and widely through accounts with a particularly wide reach or other means of amplification. Providers of very large online platforms and of very large online search engines should assess the risk of dissemination of illegal content irrespective of whether or not the information is also incompatible with their terms and conditions.

This assessment is without prejudice to the personal responsibility of the recipient of the service of very large online platforms or of the owners of websites indexed by very large online search engines for possible illegality of their activity under the applicable law.”

(LIBER Europe, Flickr, CC BY 2.0)

“(81) A second category concerns the actual or foreseeable impact of the service on the exercise of fundamental rights, as protected by the Charter, including but not limited to human dignity, freedom of expression and of information, including media freedom and pluralism, the right to private life, data protection, the right to non-discrimination, the rights of the child and consumer protection.

Such risks may arise, for example, in relation to the design of the algorithmic systems used by the very large online platform or by the very large online search engine or the misuse of their service through the submission of abusive notices or other methods for silencing speech or hampering competition.

When assessing risks to the rights of the child, providers of very large online platforms and of very large online search engines should consider for example how easy it is for minors to understand the design and functioning of the service, as well as how minors can be exposed through their service to content that may impair minors’ health, physical, mental and moral development. Such risks may arise, for example, in relation to the design of online interfaces which intentionally or unintentionally exploit the weaknesses and inexperience of minors or which may cause addictive behaviour.

(82) A third category of risks concerns the actual or foreseeable negative effects on democratic processes, civic discourse and electoral processes, as well as public security.

(83) A fourth category of risks stems from similar concerns relating to the design, functioning or use, including through manipulation, of very large online platforms and of very large online search engines with an actual or foreseeable negative effect on the protection of public health, minors and serious negative consequences to a person’s physical and mental well-being, or on gender-based violence.

Such risks may also stem from coordinated disinformation campaigns related to public health, or from online interface design that may stimulate behavioural addictions of recipients of the service.

(84) When assessing such systemic risks, providers of very large online platforms and of very large online search engines should focus on the systems or other elements that may contribute to the risks, including all the algorithmic systems that may be relevant…”

This is very interesting. I would argue that under Articles 81 and 84, for example, the blatant use of both algorithms limiting reach and plain blocking by Twitter and Facebook, to promote a pro-Israeli narrative and to limit pro-Palestinian content, was very plainly a breach of the EU Digital Services Act by deliberate interference with “freedom of expression and of information, including media freedom and pluralism.”

The legislation is very plainly drafted with the specific intent of outlawing the use of algorithms to interfere with freedom of speech and public discourse in this way.

But it is of course a great truth that the honesty and neutrality of prosecution services is much more important to what actually happens in any “justice” system than the actual provisions of legislation.

Only a fool would be surprised that the EU Digital Services Act is being shoehorned into use against Durov, apparently for lack of cooperation with Western intelligence services and being a bit Russian, and is not being used against either Elon Musk or Mark Zuckerberg for limiting the reach of pro-Palestinian content.

It is also worth noting that Telegram is not considered to be a very large online platform by the EU Commission, which has to date accepted Telegram’s contention that it has fewer than 45 million users in the EU, so these extra obligations do not apply.

If we look at the charges against Durov in France, I therefore cannot see how they are in fact compatible with the EU Digital Services Act.

Unless he refused to remove or act over specific individual content specified by the French authorities, or unless he set up Telegram with the specific intent of facilitating organised crime, I do not see how Durov is not protected under Articles 20 and 30 and other safeguards found in the Digital Services Act.

The French charges appear however to be extremely general and not to relate to particular specified communications. This is an abuse.

What the Digital Services Act does not contain is a general obligation to hand over unspecified content or encryption keys to police forces or security agencies. It is also remarkably reticent on “misinformation.”

Regulations 82 or 83 above obviously provide some basis for “misinformation” policing, but the Act in general relies on the rather welcome assertion that regulations governing what speech and discourse is legal should be the same offline as online.

So in short, the arrest of Pavel Durov appears to be pretty blatant abuse and only very tenuously connected to the legal basis given as justification. This is simply a part of the current rising wave of authoritarianism in Western “democracies”.

Craig Murray is an author, broadcaster and human rights activist. He was British ambassador to Uzbekistan from August 2002 to October 2004 and rector of the University of Dundee from 2007 to 2010. His coverage is entirely dependent on reader support. Subscriptions to keep this blog going are gratefully received.

This article is from CraigMurray.org.uk.

The views expressed are solely those of the author and may or may not reflect those of Consortium News.

Russia and the EU both want to snoop on Telegram users. But Russia did not arrest Durov. France did. The France of Liberte. The Rule Of Law, Independent Judiciary, Equality Under The Law, Human Rights, Civil Liberties, now just so many sick jokes. Assange, Medhurst, Ritter, Durov, Patrick Lancaster, all subject to selective prosecutions and bureaucratic persecution on trumped up charges. The first of many. And Trump, of course, whatever you think of him. Though they recently tried to give him the full JFK, RFK, MLK treatment. Repressive legislation, stringent censorship, blanket surveillance, blatant intimidation and persecution and suppression of any dissent. We are sliding rapidly into an Orwellian dystopia.

If Durov is charged on these specious grounds, maybe the CEOs of Daimler Benz and BMW should be arrested and prosecuted when their products are used by bank robbers as getaway cars. And the CEOs of Lockheed and Raytheon and British Aerospace most certainly should be when their products are used to murder children in Gaza.

You are 100% correct. I am considered a pariah in my home city of San Francisco, a city that still thinks of itself as “progressive”, when it absolutely is not. Here it is all identity politics and rah-rah for Harris because she ticks all the identity boxes except for being LGBTQ+. But the LGBTQ+ community supports her anyway. She was a lousy DA and a lousy, right-wing, law & order state AG.

I wonder how the world survived this long with the seemingly infinite poisoned soup the world’s leadership class have been sucking in to turn them into such traitors to their own people, to justice and right action, much less to simple pure decency, and to their own souls. (Is there just a dark empty space in place of a soul in these people?)

Thanks for this article.

I have used it in my class on “Internet and Public Policy” at Georgia Tech’s School of Public Policy.

So, as you noted, the disclosure you share is dense and boring, but all too frequently critical issues are camouflaged in language designed to dissuade analysis by making it too dense and too boring to follow, something for which you, thankfully, did not fall. Important article. Thank you.

When I characterized Pavel-gate as a fancier mugging than the three guys on a San Francisco street, after Pavel left a meeting with Jack Dorsey, a person on a job-seeking platform asked if I were insinuating that D-something (four letters) was involved in the San Francisco incident. I responded that I had no idea what D___ is, so I could not have insinuated its involvement. I asserted to the person responding that if four-letter combinations mean something, it might be helpful if the words are spelled out, as sometimes letter combinations may have more than one meaning. I only meant to indicate, by my recounting of the street incident, that persons wanting valuable property of others could use force to take property. That would not mean persons wanting to take property by force are all from the same tribe. The person advocating against Pavel on the job platform wrote that D-something is the French entity similar to the US central imagination agency. I asked if the plane and phone are now in possession of the D-agency. I have not checked to see if I got an answer yet.

Telegram is arguably the best place anywhere on earth to get the unvarnished truth about: 1.) Israel’s genocidal sadism and disgusting land grabs, and 2.) NATO’s extremely dangerous provocations and deadly proxy attacks against Russians and ethnic Russians.

These are the reasons this young Telegram executive was jailed.

A very useful and informative article. I’m glad I don’t need to read that Act.

Ditto here. Craig Murray is a hero for wading through such muck. Many thanks, Amb. Murray.

What a sickening use of law by petty bureaucrats to harass people who don’t seem to conform. With every step, EU governments keep sinking lower. They act like children throwing a tantrum, “Rights of Man” be damned.

And these same small bureaucrats want to set the future of the EU as a vassal state of the US. Disgusting. Not to mention that vassal states of the US are only one of two things; they are either cannon-fodder, like Ukraine, or they are food, like the EU.

Speaking of US vassalage, it’s even said that the USA was the one who ordered the arrest of Durov.

The EU are all already vassals of the U.S. empire. They tremble at every mindless command given them by the U.S. and its overseas bulldog NATO. Olaf Scholz surrendered his rear end to Biden regarding the destruction of Nord Stream and followed orders. Macron thinks he’s Napoleon, but forgets what happened to Napoleon in Russia (and to Hitler, too). And then there is the Von der Hitler woman. What a nauseating crowd.

From an article in the Guardian 28 August:

“Russia attempted to ban Telegram in 2018, but lifted all restrictions on the platform after Russian authorities stated that Durov was willing to cooperate in fighting terrorism and extremism.

While Durov has at times cast himself as a Russian exile, leaked border data seen by the Guardian showed that he visited the country more than 50 times between 2015 and 2021, leading to renewed speculation over his links to the Kremlin.

Russian officials have framed Durov’s arrest as politically motivated, a claim strongly denied by Emmanuel Macron, the French president.

Questions have also been raised about the timing and circumstances of Durov’s detention, in particular whether he knew that Paris had issued a warrant against him.”

Curiouser and curiouser. But thank you, Mr. Murray, for wading through the European gobbledygook.

Some info that maybe helps to clear at least part of the confusion:

All Western services are bound by law to share their user database with the authorities. Not only Facebook, Google and the like; even seemingly secure options like Signal are no exception. The powerful like to know what you are up to. Russia isn’t an exception, obviously; this is why they got their hands on Durov’s previous project, VK (the “Russian Facebook”). Hence the genius idea behind the next project, Telegram: to spread the servers all across the globe, placing them under very different legal entities and jurisdictions, making it practically impossible for any authorities to access them.

About those links to the Kremlin:

Russia isn’t an exception, they can’t access the servers either. Just think about it, Ukrainian officials use Telegram predominantly too, would they do it if Putin was watching? Putin’s request was to ban some channels that they don’t like (like being critical of the state, etc.). And Telegram did it. But they follow these regulations everywhere else too, so one could talk about their “links to the EU”, as e.g. you cannot access the banned Russian channels, like RT and such, via Telegram from the EU. Just like you can’t access anything porn related from Iran and so on, all around the planet, according to the local regulations. But that doesn’t mean the user database isn’t safe and secure still.

And this is their problem. And very likely this is the reason they are trying to pressure him with these ridiculous charges, which the above article covered more than well.

Thank you for that. It was more this part which piqued my curiosity:

“While Durov has at times cast himself as a Russian exile, leaked border data seen by the Guardian showed that he visited the country more than 50 times between 2015 and 2021, leading to renewed speculation over his links to the Kremlin.

Russian officials have framed Durov’s arrest as politically motivated, a claim strongly denied by Emmanuel Macron, the French president.”

The West is a sinking ship already. It is daily destroying its beauty as a result of misjudgment.

As to sinking ships:

“These are the only genuine ideas; the ideas of the shipwrecked. All the rest is rhetoric, posturing, farce. He who does not really feel himself lost, is lost without remission; that is to say, he never finds himself, never comes up against his own reality.”

Ortega y Gasset from “Revolt of the Masses”, 1929