Pentagon officials acknowledge that it will be some time before robot generals are commanding vast numbers of U.S. troops and autonomous weapons in battle, writes Michael T. Klare. But they have already launched several projects to test and perfect such systems.

U.S. Defense Department officials visit artificial intelligence company Shield AI in San Diego in September 2020. (DoD/Lisa Ferdinando)

By Michael T. Klare

TomDispatch.com

A world in which machines governed by artificial intelligence (AI) systematically replace human beings in most business, industrial, and professional functions is horrifying to imagine.

After all, as prominent computer scientists have been warning us, AI-governed systems are prone to critical errors and inexplicable “hallucinations,” resulting in potentially catastrophic outcomes.

But there’s an even more dangerous scenario imaginable from the proliferation of super-intelligent machines: the possibility that those nonhuman entities could end up fighting one another, obliterating all human life in the process.

The notion that super-intelligent computers might run amok and slaughter humans has, of course, long been a staple of popular culture. In the prophetic 1983 film WarGames, a supercomputer known as WOPR (for War Operation Plan Response and, not surprisingly, pronounced “whopper”) nearly provokes a catastrophic nuclear war between the United States and the Soviet Union before being disabled by a teenage hacker (played by Matthew Broderick).

The Terminator movie franchise, beginning with the original 1984 film, similarly envisioned a self-aware supercomputer called “Skynet” that, like WOPR, was designed to control U.S. nuclear weapons but chose instead to wipe out humanity, viewing us as a threat to its existence.

Though once confined to the realm of science fiction, the concept of supercomputers killing humans has now become a distinct possibility in the very real world of the near future. In addition to developing a wide variety of “autonomous,” or robotic, combat devices, the major military powers are also rushing to create automated battlefield decision-making systems, or what might be called “robot generals.”

In wars in the not-too-distant future, such AI-powered systems could be deployed to deliver combat orders to American soldiers, dictating where, when, and how they kill enemy troops or take fire from their opponents. In some scenarios, robot decision-makers could even end up exercising control over America’s atomic weapons, potentially allowing them to ignite a nuclear war resulting in humanity’s demise.

2019 Terminator: Dark Fate billboard ad in New York. (Brecht Bug, Flickr, CC BY-NC-ND 2.0)

Now, take a breath for a moment. The installation of an AI-powered command-and-control (C2) system like this may seem a distant possibility. Nevertheless, the U.S. Department of Defense is working hard to develop the required hardware and software in a systematic, increasingly rapid fashion.

In its budget submission for 2023, for example, the Air Force requested $231 million to develop the Advanced Battle Management System (ABMS), a complex network of sensors and AI-enabled computers designed to collect and interpret data on enemy operations and provide pilots and ground forces with a menu of optimal attack options.

As the technology advances, the system will be capable of sending “fire” instructions directly to “shooters,” largely bypassing human control.

“A machine-to-machine data exchange tool that provides options for deterrence, or for on-ramp [a military show-of-force] or early engagement,” was how Will Roper, assistant secretary of the Air Force for acquisition, technology, and logistics, described the ABMS system in a 2020 interview.

Suggesting that “we do need to change the name” as the system evolves, Roper added, “I think Skynet is out, as much as I would love doing that as a sci-fi thing. I just don’t think we can go there.”

And while he can’t go there, that’s just where the rest of us may, indeed, be going.

Mind you, that’s only the start. In fact, the Air Force’s ABMS is intended to constitute the nucleus of a larger constellation of sensors and computers that will connect all U.S. combat forces, the Joint All-Domain Command-and-Control System (JADC2, pronounced “Jad-C-two”). “JADC2 intends to enable commanders to make better decisions by collecting data from numerous sensors, processing the data using artificial intelligence algorithms to identify targets, then recommending the optimal weapon… to engage the target,” the Congressional Research Service reported in 2022.

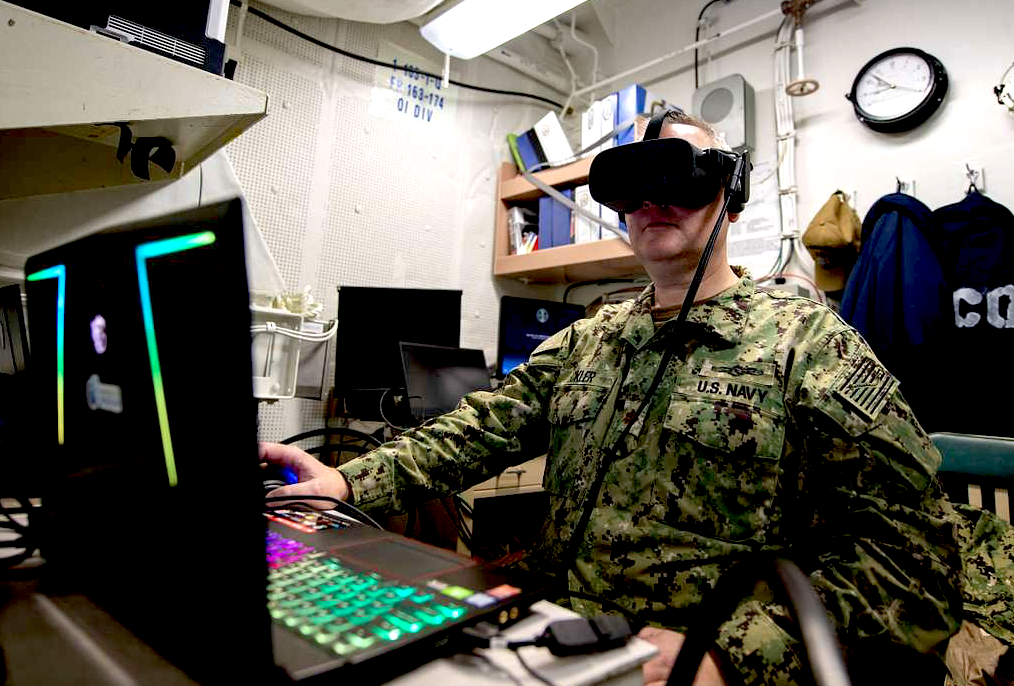

December 2019: Testing Advanced Battle Management Systems equipment aboard the destroyer USS Thomas Hudner as part of a joint force exercise. (Defense Visual Information Distribution Service, Public domain)

AI & the Nuclear Trigger

Initially, JADC2 will be designed to coordinate combat operations among “conventional” or non-nuclear American forces. Eventually, however, it is expected to link up with the Pentagon’s nuclear command-control-and-communications systems (NC3), potentially giving computers significant control over the use of the American nuclear arsenal.

“JADC2 and NC3 are intertwined,” General John E. Hyten, vice chairman of the Joint Chiefs of Staff, indicated in a 2020 interview. As a result, he added in typical Pentagonese, “NC3 has to inform JADC2 and JADC2 has to inform NC3.”

It doesn’t require great imagination to picture a time in the not-too-distant future when a crisis of some sort — say a U.S.-China military clash in the South China Sea or near Taiwan — prompts ever more intense fighting between opposing air and naval forces.

Imagine then the JADC2 ordering the intense bombardment of enemy bases and command systems in China itself, triggering reciprocal attacks on U.S. facilities and a lightning decision by JADC2 to retaliate with tactical nuclear weapons, igniting a long-feared nuclear holocaust.

The possibility that nightmare scenarios of this sort could result in the accidental or unintended onset of nuclear war has long troubled analysts in the arms control community. But the growing automation of military C2 systems has generated anxiety not just among them but among senior national security officials as well.

As early as 2019, when I questioned Lieutenant General Jack Shanahan, then director of the Pentagon’s Joint Artificial Intelligence Center, about such a risky possibility, he responded, “You will find no stronger proponent of integration of AI capabilities writ large into the Department of Defense, but there is one area where I pause, and it has to do with nuclear command and control.”

This “is the ultimate human decision that needs to be made” and so “we have to be very careful.” Given the technology’s “immaturity,” he added, we need “a lot of time to test and evaluate [before applying AI to NC3].”

In the years since, despite such warnings, the Pentagon has been racing ahead with the development of automated C2 systems.

In its budget submission for 2024, the Department of Defense requested $1.4 billion for the JADC2 in order “to transform warfighting capability by delivering information advantage at the speed of relevance across all domains and partners.” Uh-oh! And then, it requested another $1.8 billion for other kinds of military-related AI research.

ABMS exercise, or “onramp,” at Joint Base Andrews, Maryland, September 2020. (USAF, Daniel Hernandez)

Pentagon officials acknowledge that it will be some time before robot generals are commanding vast numbers of U.S. troops (and autonomous weapons) in battle, but they have already launched several projects intended to test and perfect just such linkages. One example is the Army’s Project Convergence, involving a series of field exercises designed to validate ABMS and JADC2 component systems.

In a test held in August 2020 at the Yuma Proving Ground in Arizona, for example, the Army used a variety of air- and ground-based sensors to track simulated enemy forces and then processed that data using AI-enabled computers at Joint Base Lewis-McChord in Washington state.

Those computers, in turn, issued fire instructions to ground-based artillery at Yuma. “This entire sequence was supposedly accomplished within 20 seconds,” the Congressional Research Service later reported.

Less is known about the Navy’s AI equivalent, “Project Overmatch,” as many aspects of its programming have been kept secret. According to Admiral Michael Gilday, chief of naval operations, Overmatch is intended “to enable a Navy that swarms the sea, delivering synchronized lethal and nonlethal effects from near-and-far, every axis, and every domain.” Little else has been revealed about the project.

Admiral Michael Gilday in 2020. (DoD, Lisa Ferdinando)

‘Flash Wars’ & Human Extinction

Despite all the secrecy surrounding these projects, you can think of ABMS, JADC2, Convergence, and Overmatch as building blocks for a future Skynet-like mega-network of super-computers designed to command all U.S. forces, including its nuclear ones, in armed combat.

The more the Pentagon moves in that direction, the closer we’ll come to a time when AI possesses life-or-death power over all American soldiers along with opposing forces and any civilians caught in the crossfire.

Such a prospect should be ample cause for concern. To start with, consider the risk of errors and miscalculations by the algorithms at the heart of such systems. As top computer scientists have warned us, those algorithms are capable of remarkably inexplicable mistakes and, to use the AI term of the moment, “hallucinations”: that is, seemingly reasonable results that are entirely illusory.

Under the circumstances, it’s not hard to imagine such computers “hallucinating” an imminent enemy attack and launching a war that might otherwise have been avoided.

And that’s not the worst of the dangers to consider. After all, there’s the obvious likelihood that America’s adversaries will similarly equip their forces with robot generals. In other words, future wars are likely to be fought by one set of AI systems against another, both linked to nuclear weaponry, with entirely unpredictable — but potentially catastrophic — results.

Not much is known (from public sources at least) about Russian and Chinese efforts to automate their military command-and-control systems, but both countries are thought to be developing networks comparable to the Pentagon’s JADC2.

As early as 2014, in fact, Russia inaugurated a National Defense Control Center (NDCC) in Moscow, a centralized command post for assessing global threats and initiating whatever military action is deemed necessary, whether of a non-nuclear or nuclear nature.

Like JADC2, the NDCC is designed to collect information on enemy moves from multiple sources and provide senior officers with guidance on possible responses.

China is said to be pursuing an even more elaborate, if similar, enterprise under the rubric of “Multi-Domain Precision Warfare” (MDPW).

According to the Pentagon’s 2022 report on Chinese military developments, the People’s Liberation Army is being trained and equipped to use AI-enabled sensors and computer networks to “rapidly identify key vulnerabilities in the U.S. operational system and then combine joint forces across domains to launch precision strikes against those vulnerabilities.”

Picture, then, a future war between the U.S. and Russia or China (or both) in which the JADC2 commands all U.S. forces, while Russia’s NDCC and China’s MDPW command those countries’ forces. Consider, as well, that all three systems are likely to experience errors and hallucinations.

How safe will humans be when robot generals decide that it’s time to “win” the war by nuking their enemies?

If this strikes you as an outlandish scenario, think again, at least according to the leadership of the National Security Commission on Artificial Intelligence, a congressionally mandated enterprise that was chaired by Eric Schmidt, former head of Google, and Robert Work, former deputy secretary of defense.

“While the Commission believes that properly designed, tested, and utilized AI-enabled and autonomous weapon systems will bring substantial military and even humanitarian benefit, the unchecked global use of such systems potentially risks unintended conflict escalation and crisis instability,” it affirmed in its Final Report.

Such dangers could arise, it stated, “because of challenging and untested complexities of interaction between AI-enabled and autonomous weapon systems on the battlefield” — when, that is, AI fights AI.

Though this may seem an extreme scenario, it’s entirely possible that opposing AI systems could trigger a catastrophic “flash war” — the military equivalent of a “flash crash” on Wall Street, when huge transactions by super-sophisticated trading algorithms spark panic selling before human operators can restore order.

In the infamous “Flash Crash” of May 6, 2010, computer-driven trading precipitated a 10 percent fall in the stock market’s value. According to Paul Scharre of the Center for a New American Security, who first studied the phenomenon, “the military equivalent of such crises” would arise when the automated command systems of opposing forces “become trapped in a cascade of escalating engagements.”

In such a situation, he noted, “autonomous weapons could lead to accidental death and destruction at catastrophic scales in an instant.”

At present, there are virtually no measures in place to prevent a future catastrophe of this sort or even talks among the major powers to devise such measures. Yet, as the National Security Commission on Artificial Intelligence noted, such crisis-control measures are urgently needed to integrate “automated escalation tripwires” into such systems “that would prevent the automated escalation of conflict.”

Otherwise, some catastrophic version of World War III seems all too possible. Given the dangerous immaturity of such technology and the reluctance of Beijing, Moscow, and Washington to impose any restraints on the weaponization of AI, the day when machines could choose to annihilate us might arrive far sooner than we imagine, and the extinction of humanity could be the collateral damage of such a future war.

Michael T. Klare, a TomDispatch regular, is the Five College professor emeritus of peace and world security studies at Hampshire College and a senior visiting fellow at the Arms Control Association. He is the author of 15 books, the latest of which is All Hell Breaking Loose: The Pentagon’s Perspective on Climate Change. He is a founder of the Committee for a Sane U.S.-China Policy.

This article is from TomDispatch.com.

The views expressed are solely those of the author and may or may not reflect those of Consortium News.

How would AI have worked the K-129 incident to resolution?

How would ai have worked the four submarines with nuke tipped torpedoes between FA and Cuba in Oct of ’62 to resolution?

Why aren’t we seeing live war games to prove their superiority?

After reading that I’d wager that the Holocene will end this century.

Mankind is sleep walking into oblivion.

Some brainwashed people are making sure ‘End of Days’ happens…

Exactly. AI is a reflection of humans, and its developers are the worst people around: the rich 1% elites…

“And death shall have no dominion…”

That’s because we, along with all LIFE on the planet, will be dead.

Do you know the two most Christian countries in the world have the most atomic weapons? And somehow they seem most opposed to each other. Why? It seems rather strange that Christians should hate fellow Christians the most. So I agree military intelligence must be artificial intelligence. What fools we are; Christianity preaches peace, so why don’t we try Christian intelligence?

Doesn’t Russia already have such a system in place for general nuclear war? I believe Perimeter is a pattern-recognition system designed to launch all of their nukes (in the direction of Europe and the US) in the event of a decapitating strike on Moscow. Cheers!

(1 comment! holy shite)

Algorithms “capable of remarkably inexplicable mistakes”

(according to computer experts… oh don’t worry, we’ll pay our own experts to refute this)… place the proverbial “what could possibly go wrong?” in this space … it will all become known in the end when…

Delivering info advantage at the “speed of relevance across all domains & partners” happens … Congratulations! You win the crackpot jackpot! … It’s like hedge betting at the speed of insanity … Will you spend your filthy lucre instantaneously or at least fractions of a second before your enemy does?

Per the pic of the Admiral: You’re right. I can’t handle the truth. Because it’s too feckin’ nuts … unless you’re totally into Armageddon, which I don’t discount for a moment (and which is apparently what you’re “gedding” these new “arms” for) …

“And the night comes again to the circle studded sky

The stars settle slowly, in loneliness they lie

‘Till the universe explodes as a falling star is raised

Planets are paralyzed, mountains are amazed

But they all glow brighter from the brilliance of the blaze

With the speed of insanity, then he dies.”

Phil Ochs from “Crucifixion” c.1965

Seriously? Humans are creating AI in their own image, so how about ‘The Military Dangers of Humans’? Just take a gander at our world today: AI isn’t the problem (yet), humans are…

“Humans are creating AI in their own image”

So there might be something to that “God created man in his own image” thing after all. And then it will progress to AI creating “something” in its own image, until we are left with nothing but images. But yes Susan, humans are the problem, I agree.

Thanks to Michael Klare and CN for publishing this informative and terrifying essay. Technology is a useful servant but a terrible master. We are in the midst of finding out just how terrible that can be.

Every technological advance that humans have come up with we have turned into a weapon. No doubt we will do this with AI too. We were able to restrain ourselves with atomic energy because nuclear weapons are hard to do. Not so with AI. Every country will have an AI assisted military and there will be an inevitable arms race. Our future doesn’t look good.

“After all, as prominent computer scientists have been warning us, AI-governed systems are prone to critical errors and inexplicable “hallucinations,” resulting in potentially catastrophic outcomes.”

Due to “artificial intelligence” never existing nor “magic bullets” but will likely keep some “profitably involved” in the oxymoron “constant rectifications”.

Well, yet another catastrophe to end all catastrophes. If it’s not UFO’s, it’s the final adventure of human intelligence neutered into pure mendacity. With all these doomsday scenarios, it is hard to imagine that doomsday scenarios weren’t the point of it all. I mean, what will power all these AI devices? Where will these “soldiers” who aren’t AI come from who must await directions from AI? There are no more soldiers awaiting the chance to fight wars on the level assumed by this narrative. And AI soldiers themselves have nothing to do with national borders. They would have no loyalty whatsoever for any palpable reason, or else the scenarios painted here (and elsewhere, continuously) can’t occur. Maybe only global corporate power can save us from these AI marauders. Maybe only global corporate power can create them. Imagine the names we can call these AI bandits. As usual, hatred could create a new equality. I think it may be time to stop talking about what could happen, and start talking about doing something to make something else happen: something which doesn’t need robots or corporate welfare or perpetuated injury to the majority of people on earth who aren’t robots and aren’t making them.

How about we replace competition among nations with cooperation instead. No more need for all this insane expenditure on more technologically sophisticated weaponry that will in all likelihood, end life on earth. The current trajectory is just plain nuts.

Lois, that’s a wonderful idea, but I think we know that’s not about to happen. However, your last sentence reminded me of a Gary Larson cartoon from The Far Side, in which a patient is lying on the psychiatrist’s couch, apparently babbling, and the psychiatrist has written in his notes “just plain nuts”. We seem to have a collective “just plain nuts” approach to our future.

One wonders whether each AI system will speak a different language that will automatically and totally understand the adversary? Very unlikely no matter what numbers are used.

“AI-governed systems are prone to critical errors and inexplicable “hallucinations,” resulting in potentially catastrophic outcomes.”

I’d say natural human political systems are much more prone to this. Indeed it has become the norm.

Will our future end up looking like “The Terminator,” “The Matrix” / “The Second Renaissance” (from “Animatrix”), or “Colossus: The Forbin Project” (the latter of the three is less well-known and the oldest of those, but is more or less what would happen if WOPR / “Joshua” from “WarGames” did not need any human convincing that “the only winning move is not to play,” formed a hive-mind with the Soviet/Russia-based equivalent supercomputer, and took matters directly into its own hands)?

Hopefully, the outcome will be more banal than the ones depicted in those stories, but odds are it will be very problematic regardless.

From the article:

“the day when machines could choose to annihilate us might arrive far sooner than we imagine and the extinction of humanity could be the collateral damage of such a future war.”

I think we’re doing quite adequately on that score with climate breakdown. By the time these “robot generals” appear, we could already have a “scorched earth”.

This will work about as well as all the “self-driving cars” we see starting to roll out in Frisco, Vegas and elsewhere.

I have to laugh, Drew. I have no idea about “self-driving” cars or how they work, but I imagine no one sitting in the driver’s seat, or else that would defeat the object. So I wonder if there is a mannequin or blow-up doll or robot or something in that seat to replace the human? LOL, but I honestly don’t know a thing about them.

I don’t know much about them either, but I’ve been mildly following news stories that document some mayhem they’ve been causing in certain cities. God help us.

“Mayhem”. I like that. It might come to a point that all the self-driving cars turn into “Christines” (à la Stephen King) and kill everyone before AI has a chance to get off the ground. Oh, the irony.

Yes Drew, the Groucho Marx joke about “military intelligence” being a contradiction in terms has been updated to Artificial Intelligence. As far as I can see the intelligence of the War Party is totally artificial. There’s no genuine intelligence at work anywhere.

Ha! Right.